Job Title: Senior Data Engineer

Location: Fully Remote (India)

Job Type: Payroll

Salary: ₹13 lakh – ₹26 lakh per year

Duration: Long-term

Company Overview:

Agivant is a technology company focused on enabling data-driven decision-making across the organization. We are building a world-class data team and are looking for a skilled, motivated Senior Data Engineer who thrives in a fast-paced, innovative environment.

Key Responsibilities:

- 🔧 Design, Develop & Maintain Data Pipelines

- Build robust, scalable, and efficient data pipelines for both ELT (Extract, Load, Transform) and ETL (Extract, Transform, Load) processes.

- Ensure high standards for data accuracy, completeness, and timeliness in all pipelines.

- 🧠 Data Modeling & Requirement Gathering

- Collaborate with business stakeholders to gather data requirements and translate them into optimized data models and processing workflows.

- ⚙️ Work with Modern Data Stack

- Use tools such as Apache Airflow, Elasticsearch, Snowflake, AWS S3, and NFS to build and maintain robust data infrastructure.

- Support analytics and reporting needs by designing and maintaining data warehouse schemas.

- ✅ Implement Quality & Monitoring

- Develop automated data quality checks to maintain data integrity and proactively detect issues.

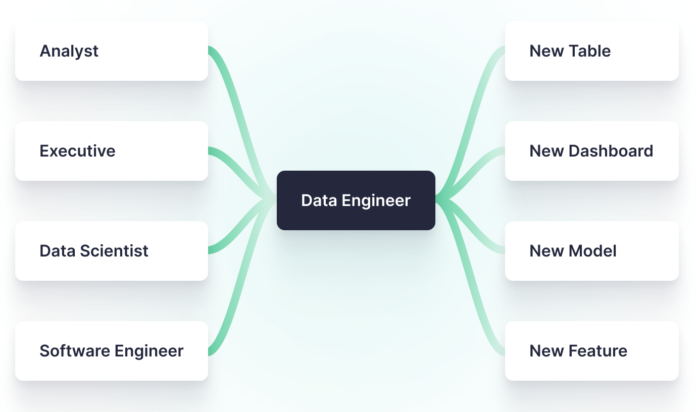

- 🤝 Cross-functional Collaboration

- Partner with data scientists, analysts, and engineers to ensure that data is accessible, clean, and usable for diverse analytical use cases.

- 📈 Architectural Enhancements

- Contribute to the design, improvement, and performance tuning of the company’s overall data warehouse architecture.

- 🔄 Adopt Best Practices

- Stay up to date with current industry practices, including CI/CD, DevSecFinOps, and Scrum, and with emerging technologies in data engineering.

Must-Have Skills & Experience:

- 🎓 Bachelor’s degree in Computer Science, Engineering, or a related technical field.

- 📊 5+ years of experience as a Data Engineer with strong expertise in ETL/ELT processes.

- ❄️ 3+ years of hands-on experience with Snowflake data warehousing.

- ☁️ Experience working with cloud-based storage systems such as AWS S3.

- 🐍 3+ years of professional experience with Python, specifically for data manipulation, automation, and scripting.

- ⏳ 3+ years of experience creating and maintaining Apache Airflow workflows.

- 🔎 At least 1 year of working experience with Elasticsearch and its integration into data pipelines.

- 💾 Familiarity with NFS and other file storage systems.

- 💡 Strong understanding of SQL and data modeling techniques.

- 🧩 Excellent problem-solving skills and the ability to think analytically.

- 💬 Strong communication and collaboration skills to work across functional teams.

Preferred Industry Tags:

- Data Engineer (Software and Web Development)

- DevOps Engineer

- Technical Specialist (Information Design & Documentation)

- Database Administrator

- Cloud Architect